What Are AI and ML?

Artificial Intelligence (AI) refers to the simulation of human intelligence in machines designed to think, learn, and solve problems like humans. AI systems can perform tasks that typically require human intelligence, such as visual perception, speech recognition, decision-making, and language translation.

Machine Learning (ML) is a subset of AI that focuses on algorithms that allow computers to learn from data and make decisions based on it. Instead of being explicitly programmed to perform a task, ML algorithms use statistical techniques to identify patterns in data, learn from those patterns, and make predictions or decisions with little human intervention.

History and Evolution of AI and ML

The journey of Artificial Intelligence (AI) and Machine Learning (ML) spans several decades and has evolved through various phases:

1. Early Beginnings (1950s-1960s):

* 1950: Alan Turing introduces the "Turing Test" to assess machine intelligence.

* 1956: The term "Artificial Intelligence" is coined at the Dartmouth Conference.

* 1957: Frank Rosenblatt develops the Perceptron, an early neural network model.

2. The Rise of Expert Systems (1970s-1980s):

* 1970s: AI research progresses with logic-based systems and expert systems.

* 1980s: Expert systems see commercial adoption and Machine Learning gains traction, but an "AI Winter" sets in late in the decade as limited computing power and unmet expectations lead to funding cuts.

3. Resurgence and Statistical Machine Learning (1990s-2000s):

* 1990s: AI resurfaces with advancements in probabilistic reasoning and ML algorithms.

* 1997: IBM's Deep Blue defeats chess champion Garry Kasparov.

* 2000s: AI evolves with improved computing power, paving the way for natural language processing and computer vision.

4. Modern AI and Deep Learning (2010s-Present):

* 2010s: Deep learning revolutionizes image and speech recognition, with companies investing heavily in AI.

* 2012: AlexNet wins the ImageNet challenge, showcasing the power of convolutional neural networks (CNNs).

* 2016: AlphaGo beats world Go champion Lee Sedol.

* 2020s: AI is widely adopted in areas such as healthcare, autonomous vehicles, and natural language processing, driven by advanced ML techniques like reinforcement learning and generative models.

Types of AI

AI can be categorized into three main types based on its capabilities:

1. Narrow AI (Weak AI):

* Definition: AI systems that are designed to perform a specific task or a narrow range of tasks. They are highly specialized and operate under a limited context.

* Examples: Virtual assistants like Siri and Alexa, recommendation systems on Netflix, image recognition systems, etc.

* Applications: Specific tasks like speech recognition, image classification, recommendation systems, and autonomous driving.

2. General AI (Strong AI):

* Definition: AI systems that possess the ability to understand, learn, and apply intelligence across a wide range of tasks, similar to human cognitive abilities.

* Examples: As of now, General AI does not exist; it is a theoretical concept.

* Applications: If achieved, General AI would be capable of performing any intellectual task that a human can do.

3. Superintelligent AI:

* Definition: A form of AI that surpasses human intelligence and capability in virtually every field, including creativity, problem-solving, and emotional intelligence.

* Examples: This is a hypothetical concept and does not currently exist.

* Applications: Superintelligent AI could revolutionize fields like medicine, science, and technology, but it also raises significant ethical and existential concerns.

Types of Machine Learning

ML can be broadly classified into four types:

1. Supervised Learning:

* Definition: Involves training an algorithm on a labeled dataset, where the correct output is known. The model learns by comparing its output with the correct answers and adjusting accordingly.

* Examples: Spam detection in email, sentiment analysis, predictive analytics.

* Algorithms: Linear regression, logistic regression, decision trees, support vector machines (SVM), neural networks.
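As a rough illustration of learning from labeled data, the sketch below fits a straight line (simple linear regression) to a handful of input/output pairs and then predicts an unseen input. The data values and the helper names `fit_line` and `predict` are made up for this example; it uses the closed-form least-squares solution rather than any particular library.

```python
# Labeled training data: every input x comes with its known correct output y.
# The numbers are invented for illustration (hours studied -> exam score).
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [52.0, 54.0, 56.0, 58.0, 60.0]


def fit_line(xs, ys):
    """Return (slope, intercept) of the least-squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(x, slope, intercept):
    """Apply the learned line to a new, unseen input."""
    return slope * x + intercept


slope, intercept = fit_line(xs, ys)
print(slope, intercept)
print(predict(6.0, slope, intercept))
```

The "learning" here is the computation of `slope` and `intercept` from the labeled pairs; prediction simply reuses those two numbers on new inputs.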

2. Unsupervised Learning:

* Definition: Involves training an algorithm on an unlabeled dataset, where the output is unknown. The model tries to find hidden patterns or intrinsic structures within the data.

* Examples: Market basket analysis, customer segmentation, anomaly detection.

* Algorithms: K-means clustering, hierarchical clustering, principal component analysis (PCA), autoencoders.
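To make the idea of finding structure without labels concrete, here is a minimal sketch of K-means clustering on one-dimensional data. The spend values, the starting centroids, and the function name `k_means_1d` are assumptions chosen for illustration; real uses typically involve many dimensions and a library implementation.

```python
def k_means_1d(points, centroids, iterations=10):
    """Cluster 1-D points around the given starting centroids."""
    centroids = list(centroids)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster.
        for i, members in enumerate(clusters):
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[i] = sum(members) / len(members)
    return centroids, clusters


# Unlabeled data: hypothetical customer spend values with two visible groups.
spend = [10.0, 12.0, 11.0, 50.0, 52.0, 51.0]
centroids, clusters = k_means_1d(spend, centroids=[0.0, 60.0])
print(centroids)
print(clusters)
```

No labels are supplied anywhere; the two groups emerge only from the distances between the points.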

3. Semi-Supervised Learning:

* Definition: A combination of supervised and unsupervised learning. The model is trained on a small amount of labeled data and a large amount of unlabeled data.

* Examples: Image recognition tasks where only some images are labeled.

* Algorithms: Semi-supervised (transductive) support vector machines, generative models.

4. Reinforcement Learning:

* Definition: Involves training an agent to make a sequence of decisions by rewarding or punishing it based on its actions. The goal is to maximize cumulative rewards.

* Examples: Robotics, gaming (like AlphaGo), autonomous vehicles.

* Algorithms: Q-learning, deep Q-networks (DQN), policy gradients, deep deterministic policy gradient (DDPG).
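The reward-driven loop described for reinforcement learning can be sketched with tabular Q-learning on a toy problem. Everything about the environment below is an assumption for illustration: a five-cell corridor where the agent starts in cell 0 and earns a reward of 1 for reaching cell 4. For simplicity the agent explores with purely random actions while learning, which Q-learning tolerates because it is an off-policy method.

```python
import random

N_STATES = 5                  # corridor cells 0..4; cell 4 is the goal
ACTIONS = ["left", "right"]
ALPHA = 0.5                   # learning rate
GAMMA = 0.9                   # discount factor for future rewards


def step(state, action):
    """Move one cell; reaching the last cell pays reward 1 and ends the episode."""
    if action == "right":
        next_state = state + 1
    else:
        next_state = max(0, state - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def train(episodes=500, seed=0):
    """Learn a Q-table (q[state][action_index]) from random exploration."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            a = rng.randrange(len(ACTIONS))  # explore with a random action
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning update: nudge q toward reward + discounted best next value.
            best_next = 0.0 if done else max(q[next_state])
            q[state][a] += ALPHA * (reward + GAMMA * best_next - q[state][a])
            state = next_state
    return q


q_table = train()
# Greedy policy for the non-terminal cells: pick the action with the highest value.
policy = [ACTIONS[row.index(max(row))] for row in q_table[:-1]]
print(policy)
```

After training, the greedy policy chooses "right" in every non-terminal cell, which is the behavior that maximizes cumulative discounted reward in this corridor.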

Why AI and ML?

1. Automation of Repetitive Tasks: AI and ML can automate mundane and repetitive tasks, freeing up human resources for more creative and complex work.

2. Data-Driven Decision Making: With the ability to analyze vast amounts of data quickly and accurately, AI and ML enable better decision-making in business, healthcare, finance, and more.

3. Personalization: AI and ML enable personalized experiences in various domains, from e-commerce to entertainment, by analyzing user behavior and preferences.

4. Improving Efficiency: AI systems can optimize processes in industries such as manufacturing, logistics, and supply chain, leading to reduced costs and improved efficiency.

5. Innovation in Healthcare: AI and ML are driving innovation in healthcare by improving diagnostics, drug discovery, personalized medicine, and patient care.

6. Economic Growth: AI and ML have the potential to contribute significantly to economic growth by creating new industries, improving productivity, and fostering innovation.

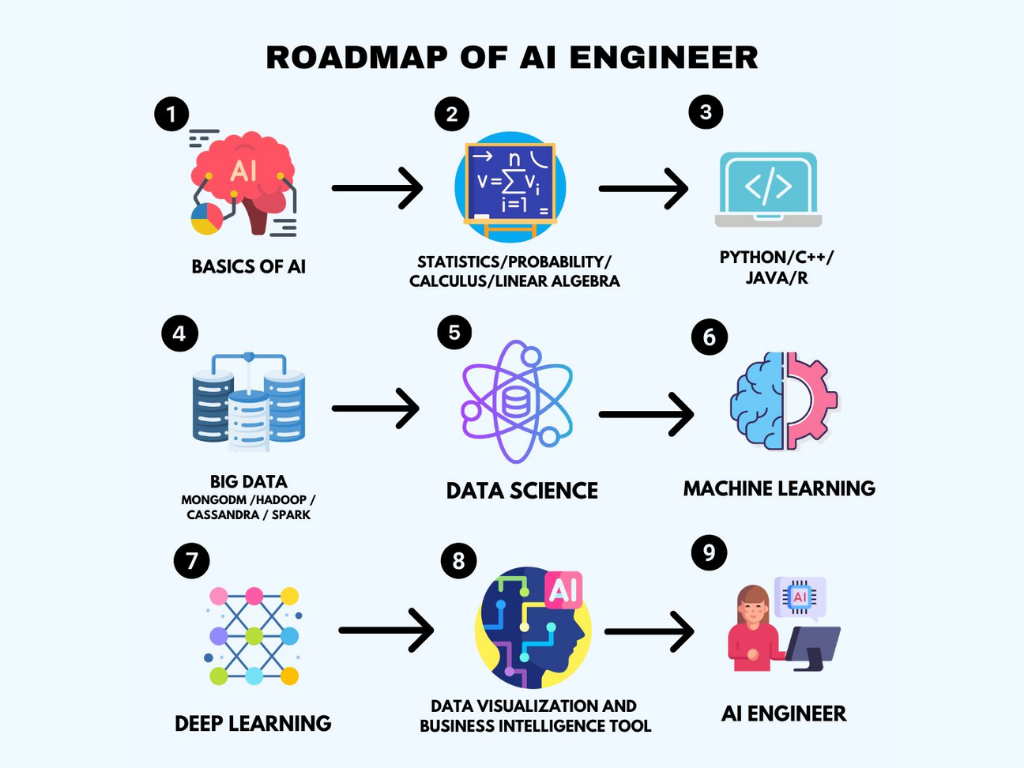

How to Learn AI and ML?

1. Understand the Basics:

* Start by understanding the basic concepts of AI, ML, and data science.

* Learn about different types of AI and ML, and the various algorithms and techniques used in the field.

2. Learn Programming:

* Proficiency in programming languages like Python, R, or Java is essential.

* Python is the most commonly used language in AI and ML due to its simplicity and the vast number of libraries available.

3. Mathematics and Statistics:

* A strong foundation in mathematics, particularly in linear algebra, calculus, probability, and statistics, is crucial for understanding ML algorithms.

* Topics like matrices, derivatives, integrals, probability distributions, and hypothesis testing are particularly important.

4. Learn Key Libraries and Tools:

* Familiarize yourself with popular ML and data libraries and frameworks like TensorFlow, PyTorch, Scikit-Learn, Keras, and Pandas.

* Learn how to use tools like Jupyter Notebook and Anaconda, as well as Git for version control.

5. Study ML Algorithms:

* Learn about various ML algorithms such as linear regression, decision trees, k-nearest neighbors (KNN), support vector machines (SVM), and neural networks.

* Understand how these algorithms work, their applications, and how to implement them.

6. Work on Projects:

* Apply your knowledge by working on real-world projects. Start with simple projects like sentiment analysis, image classification, or a recommendation system.

* Participate in Kaggle competitions to practice your skills and learn from other practitioners.

7. Learn About Data:

* Understanding data is critical in ML. Learn about data preprocessing, cleaning, and exploration.

* Familiarize yourself with concepts like data wrangling, feature engineering, and dimensionality reduction.

8. Deep Learning:

* Once you have a good grasp of ML, delve into deep learning, a subfield of ML that deals with neural networks with multiple layers (deep neural networks).

* Study topics like convolutional neural networks (CNNs), recurrent neural networks (RNNs), generative adversarial networks (GANs), and deep reinforcement learning.

9. AI Ethics and Bias:

* Learn about the ethical considerations in AI, including bias in AI models, data privacy, and the societal impact of AI technologies.

10. Stay Updated:

* The field of AI and ML is rapidly evolving. Keep yourself updated by following research papers, attending conferences, and joining AI/ML communities.

What to Learn in AI and ML?

1. Programming Languages:

* Python: The most popular language for AI/ML.

* R: Useful for statistical analysis.

* Java/C++: Used in large-scale AI systems.

2. Mathematics:

* Linear Algebra: Matrices, vectors, eigenvalues, and eigenvectors.

* Calculus: Differentiation, integration, and gradient descent.

* Probability and Statistics: Probability distributions, Bayes' theorem, hypothesis testing.

* Optimization: Convex optimization, optimization algorithms.
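Gradient descent is where the calculus and optimization topics above meet: the derivative says which direction is downhill, and the optimizer takes repeated small steps in that direction. The sketch below minimizes the convex function f(x) = (x - 3)^2; the function, the learning rate, and the name `gradient_descent` are assumptions picked for illustration.

```python
def gradient_descent(gradient, start, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient to approach a minimum."""
    x = start
    for _ in range(steps):
        x -= learning_rate * gradient(x)
    return x


# f(x) = (x - 3)^2 has derivative f'(x) = 2 * (x - 3) and its minimum at x = 3.
def f_prime(x):
    return 2 * (x - 3)


minimum = gradient_descent(f_prime, start=0.0)
print(minimum)
```

Training most ML models follows the same pattern, except that `x` becomes a vector of model parameters and the gradient is computed from a loss over the training data.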

3. Machine Learning Algorithms:

* Supervised Learning: Linear regression, logistic regression, decision trees, random forests, support vector machines.

* Unsupervised Learning: K-means clustering, hierarchical clustering, principal component analysis (PCA).

* Reinforcement Learning: Q-learning, deep Q-networks (DQN), policy gradients.

4. Deep Learning:

* Neural Networks: Basics of neural networks, activation functions, backpropagation.

* Convolutional Neural Networks (CNNs): Used in image recognition and computer vision.

* Recurrent Neural Networks (RNNs): Used in natural language processing and time series analysis.

* Generative Models: GANs, variational autoencoders (VAEs).
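The neural network basics listed above (activation functions and backpropagation) can be seen in miniature with a single neuron. The following sketch trains one sigmoid neuron to reproduce the logical OR function; the task, the hyperparameters, and the name `train_neuron` are assumptions for illustration, and a real network would stack many such units in layers.

```python
import math


def sigmoid(z):
    """Activation function that squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


# Truth table for logical OR: two inputs, one target output.
inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
targets = [0, 1, 1, 1]


def train_neuron(inputs, targets, learning_rate=1.0, epochs=5000):
    """Fit weights w1, w2 and bias b with batch gradient descent."""
    w1 = w2 = b = 0.0
    n = len(inputs)
    for _ in range(epochs):
        grad_w1 = grad_w2 = grad_b = 0.0
        for (x1, x2), y in zip(inputs, targets):
            # Forward pass: weighted sum followed by the activation.
            p = sigmoid(w1 * x1 + w2 * x2 + b)
            # Backward pass: with cross-entropy loss and a sigmoid output,
            # the gradient with respect to the weighted sum is (p - y).
            error = p - y
            grad_w1 += error * x1
            grad_w2 += error * x2
            grad_b += error
        w1 -= learning_rate * grad_w1 / n
        w2 -= learning_rate * grad_w2 / n
        b -= learning_rate * grad_b / n
    return w1, w2, b


w1, w2, b = train_neuron(inputs, targets)
predictions = [round(sigmoid(w1 * x1 + w2 * x2 + b)) for x1, x2 in inputs]
print(predictions)
```

Backpropagation in a multi-layer network applies the same chain-rule step layer by layer, passing the error signal backward from the output to earlier weights.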

5. Data Preprocessing and Feature Engineering:

* Data Cleaning: Handling missing data, outlier detection, and data normalization.

* Feature Engineering: Creating new features, feature selection, and dimensionality reduction techniques like PCA and t-SNE.
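Two of the data cleaning steps mentioned above, handling missing data and normalization, might look like the following in plain Python. The `ages` column and the helper names are hypothetical; mean imputation and min-max scaling are only one common choice among several.

```python
def impute_mean(values):
    """Replace missing entries (None) with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    mean = sum(observed) / len(observed)
    return [mean if v is None else v for v in values]


def min_max_normalize(values):
    """Rescale values linearly so the smallest maps to 0 and the largest to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:  # avoid dividing by zero when every value is identical
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical feature column with one missing entry.
ages = [20.0, None, 40.0, 60.0]
filled = impute_mean(ages)
scaled = min_max_normalize(filled)
print(filled)
print(scaled)
```

In practice the imputation mean and the min/max should be computed on the training split only and then reused on validation and test data, so that no information leaks from held-out examples.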

6. Model Evaluation and Tuning:

* Cross-Validation: Techniques like k-fold cross-validation.

* Hyperparameter Tuning: Grid search, random search, Bayesian optimization.

* Model Evaluation Metrics: Accuracy, precision, recall, F1-score, ROC-AUC.
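The evaluation metrics listed above all derive from the four counts of a binary confusion matrix. The sketch below computes accuracy, precision, recall, and F1-score from made-up labels and predictions; the function name `classification_metrics` is an assumption for this example.

```python
def classification_metrics(y_true, y_pred):
    """Compute accuracy, precision, recall, and F1 for binary labels (0 or 1)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Invented ground-truth labels and model predictions.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Precision answers "of the items predicted positive, how many were right?", recall answers "of the truly positive items, how many were found?", and F1 is their harmonic mean.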

7. AI Ethics and Responsible AI:

* Bias in AI: Understanding and mitigating bias in AI models.

* Data Privacy: Ensuring data privacy and security in AI applications.

* Explainable AI: Techniques for making AI models interpretable.

8. Tools and Frameworks:

* TensorFlow and PyTorch: Deep learning frameworks.

* Scikit-Learn: A machine learning library for Python.

* Keras: High-level neural networks API.

* Pandas and NumPy: Libraries for data manipulation and analysis.

9. Big Data Technologies:

* Hadoop and Spark: Frameworks for processing large datasets.

* SQL and NoSQL: Relational and non-relational database systems.

* Data Warehousing: Techniques for storing and managing large datasets.

10. Specialized Areas:

* Natural Language Processing (NLP): Techniques for processing and analyzing human language.

* Computer Vision: Techniques for analyzing and understanding visual data.

* Robotics: Application of AI in robotics for autonomous systems.

Roadmaps for AI/ML Learning

1. Beginner Level:

* Duration: 3-6 months.

* Focus: Basics of programming, introduction to AI/ML, basic ML algorithms, small projects.

* Resources: Online courses (Coursera, Udemy), tutorials, YouTube videos.

2. Intermediate Level:

* Duration: 6-12 months.

* Focus: Advanced ML algorithms, deep learning, mathematics for ML, larger projects.

* Resources: Books (e.g., "Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow"), research papers, intermediate courses.

3. Advanced Level:

* Duration: 12-24 months.

* Focus: Specializations (NLP, computer vision, reinforcement learning), research, contributing to open-source projects, advanced mathematics.

* Resources: Research papers, advanced courses, specialized certifications.

4. Expert Level:

* Duration: 2+ years.

* Focus: Cutting-edge research, developing new algorithms, AI ethics, leading projects, innovation.

* Resources: PhD programs, conferences, journals, collaboration with industry experts.

Ethical Concerns and Challenges in AI

1. Bias and Fairness:

* Problem: AI systems can inherit biases from training data, leading to unfair or discriminatory decisions, especially in sensitive areas like hiring and criminal justice.

* Examples: Facial recognition systems showing higher error rates for people of color; biased hiring algorithms.

* Solution: Development of fairness metrics and techniques to identify and mitigate biases in AI systems.
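One simple example of a fairness metric is the demographic parity difference: the gap between groups in the rate of favorable decisions. The sketch below is illustrative only; the decisions, the group labels "A" and "B", and the function names are invented, and a single metric like this is a starting point for investigation rather than proof that a system is fair or unfair.

```python
def selection_rates(decisions, groups):
    """Return the share of favorable decisions (1) for each group."""
    rates = {}
    for group in sorted(set(groups)):
        member_decisions = [d for d, g in zip(decisions, groups) if g == group]
        rates[group] = sum(member_decisions) / len(member_decisions)
    return rates


def demographic_parity_difference(decisions, groups):
    """Gap between the highest and lowest group selection rates (0 means parity)."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical hiring decisions (1 = advance, 0 = reject) for two groups.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
rates = selection_rates(decisions, groups)
gap = demographic_parity_difference(decisions, groups)
print(rates)
print(gap)
```

A large gap flags that one group receives favorable outcomes far more often than another, which is the kind of signal that prompts a closer look at the training data and the model.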

2. Privacy Concerns:

* Problem: AI systems may infringe on privacy by collecting, analyzing, and exploiting personal data without adequate consent.

* Examples: Targeted advertising, surveillance systems, and data breaches.

* Solution: Implement stronger privacy protection laws (e.g., GDPR) and develop privacy-preserving techniques such as federated learning.

3. Job Displacement:

* Problem: Automation driven by AI and robotics is predicted to replace many jobs, especially those involving routine tasks, leading to economic disruption.

* Examples: Autonomous vehicles replacing truck drivers; AI-powered customer service chatbots replacing human staff.

* Solution: Encourage reskilling programs, universal basic income (UBI) proposals, and development of new job sectors that leverage human creativity and social intelligence.

4. Accountability and Transparency:

* Problem: AI systems, particularly deep learning models, can act as "black boxes," making it difficult to understand how decisions are made.

* Examples: Lack of transparency in medical diagnostics or autonomous driving decisions.

* Solution: Focus on explainable AI (XAI) to design interpretable models and create regulatory frameworks ensuring accountability.

5. AI in Warfare and Autonomous Weapons:

* Problem: The use of AI in military applications raises ethical concerns about unintended escalations and lack of human oversight in lethal decision-making.

* Examples: Autonomous drones and weapons systems making life-and-death decisions without human intervention.

* Solution: Calls for international treaties to regulate or ban the development and use of autonomous weapons.

6. Ethical Use in Healthcare:

* Problem: AI applications in healthcare pose challenges in balancing innovation with patient privacy, data security, and ensuring that AI recommendations are medically sound and ethical.

* Examples: AI used for predictive diagnostics or treatment recommendations may give incorrect advice or breach patient confidentiality.

* Solution: Regulatory bodies are increasingly focusing on AI in healthcare, with an emphasis on human-in-the-loop systems to validate AI-driven insights.

Conclusion

Learning AI and ML is a journey that requires dedication, continuous learning, and hands-on experience. Start with the basics, build a strong foundation, and gradually explore more advanced topics as you gain confidence and expertise.